Crime Scaled by Confidence

One of the most important shifts happening right now isn’t technical – it’s psychological.

AI is lowering the barrier to entry.

Underground ads increasingly target people who don’t see themselves as “real hackers”: first-timers, curious opportunists, people intimidated by command lines and exploit chains. AI removes that fear.

Phrases like “no experience needed,” “AI handles everything,” and “just provide the target” appear constantly. The promise is simple: you don’t need to understand what’s happening – just follow instructions.

The result isn’t necessarily smarter attacks – it’s more attacks.

AI Sets Sail into a Blue Ocean: Expanding the Pool of Victims

There’s a long-standing belief that early phishing emails were deliberately terrible. Fraudsters weren’t trying to trick people who could spot bad grammar or awkward sentence structure – they were filtering for victims who wouldn’t question obvious red flags.

That’s why inboxes were once filled with messages like:

These emails weren’t sloppy by accident. They were intentionally ridiculous, a crude but effective way to pre-select the most vulnerable targets.

Today, that filter is gone.

Modern fraud emails are polished, fluent, and convincing. Thanks to generative AI, scammers no longer need to rely on broken language to find victims. Instead, they can produce near-perfect messages at scale – tailored, localized, and emotionally persuasive.

What was once a red ocean of obvious scams has quietly turned blue, not because fraud disappeared, but because it became far harder to recognize.

Why This Should Worry Everyone

There’s no sign that AI has suddenly turned cybercriminals into unstoppable geniuses. There are no dramatic new attack classes hiding behind the hype.

But something else is happening – something quieter and potentially more dangerous.

AI is making cybercrime feel easy.

By encouraging people to act without fully understanding what they’re doing, vibe hacking normalizes reckless behavior. It rewards speed over caution and confidence over comprehension.

That mentality doesn’t just affect criminals. It mirrors the same risks seen in legitimate environments: over-automation, blind trust in AI output, and reduced human oversight.

The underground isn’t waiting for perfect AI. It’s already comfortable acting on imperfect results – and that’s enough to scale abuse.

Combating Cybercriminal Use of AI

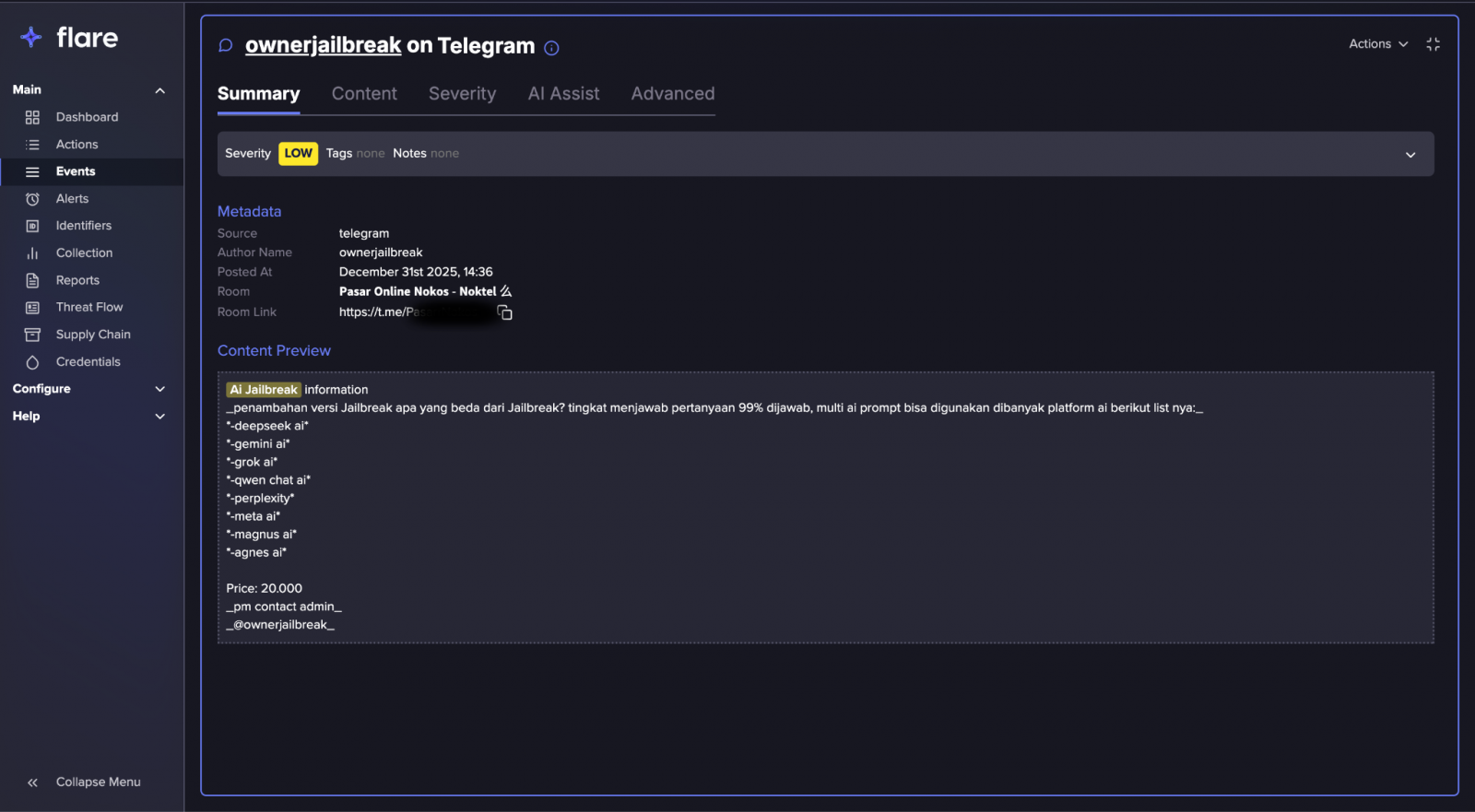

This is where Flare’s platform becomes critical. By continuously monitoring dark web forums, Telegram channels, underground marketplaces, and paste sites, Flare surfaces early signals around AI jailbreak techniques, prompt-injection abuse, malicious LLM workflows, and the commercialization of “Hacking-GPT” style tools.

In a nutshell, this is the difference between reactive defense and proactive defense.

Instead of reacting to new prompt-injection threats or AI-assisted fraud after they reach victims, defenders can use Flare to see how these techniques are discussed, packaged, tested, and sold before they scale. That gives them visibility into attacker mindset, emerging abuse patterns, and the real-world exploitation paths hiding behind AI hype.

Final Thoughts

AI hasn’t reinvented cybercrime.

What it has done is change how cybercriminals think about themselves.

In cybercrime spaces, AI is no longer just a tool. It’s permission. A way to say, “I don’t need to know everything – I just need it to work.”

Vibe hacking isn’t about better code or smarter exploits. It’s about confidence without understanding. And right now, that confidence is spreading fast.

In 2026, all hackers want is AI – not to master the craft, but to skip it.

Track AI-powered cyber threats in real time. Start a free Flare trial.

Sponsored and written by Flare.